CUDA PROGRAMMING

What is CUDA?

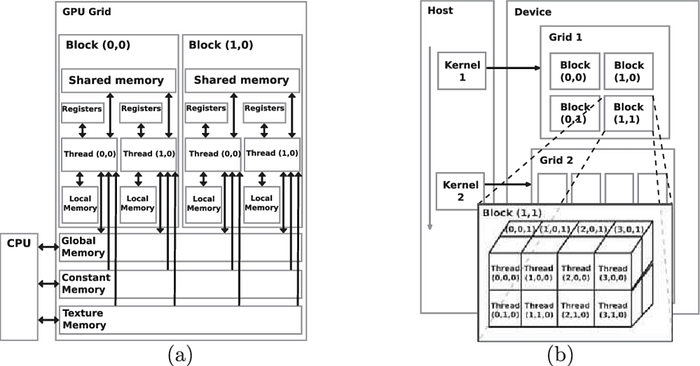

CUDA (Compute Unified Device Architecture) is NVIDIA's parallel computing platform and application programming interface. It offers simple APIs for managing devices and memory, and it runs computations in parallel on the GPU using a grid of thread blocks. CUDA uses a heterogeneous model in which the CPU (the host) and the GPU (the device) work together, and it provides shared memory and thread synchronisation on the device. CUDA supports the C/C++ languages, and programs are compiled with NVIDIA's nvcc compiler.

Let’s discuss the CUDA logical hierarchy in greater detail before moving on to CUDA programming.

Why would you utilise a GPU?

The CPU is powerful and works best for low-latency operations, where a small number of tasks must each finish quickly. CPU threads are heavyweight: the operating system switches threads on and off the cores to achieve multitasking, and this context switching is slow and expensive. Code that demands massively parallel processing therefore takes longer on a CPU, which can run only a limited number of threads at once. Compared with a CPU, a GPU has considerably higher computational throughput and memory bandwidth. GPUs work best when the same simple operation, such as floating-point arithmetic, is applied to large amounts of data. For data-parallel workloads of this kind, a GPU is usually the better choice.

Terminology

Host: The system's CPU is the host. Host memory refers to the system memory (DRAM) attached to the host.

Device: The GPU is the device, and GPU memory is referred to as device memory.

Kernel: A function that runs on the GPU. Each GPU thread executes one instance of the kernel.

Kernel launch: The point at which the CPU instructs the GPU to execute a kernel in parallel.

Execution configuration: The number of threads that run on the GPU and how they are organised (blocks per grid and threads per block).

CUDA cores: The floating-point units in NVIDIA graphics cards that perform floating-point math.

Workflow

The typical workflow has three steps:

Transfer from host to device: Device memory is allocated with cudaMalloc, and cudaMemcpy copies data from host memory to device memory. Host and device communicate over the PCI Express bus.

Kernel load: Load the GPU program and execute it, caching data on the chip for performance.

Transfer from device to host: Copy the results from device memory back to host memory with cudaMemcpy. cudaFree then releases the device memory.
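The three steps above can be sketched in a few lines. This is a minimal illustration rather than a complete application: the kernel name `scale_by_two`, the helper `run_workflow`, and the four-element array are assumptions made for the example, and error checking of the CUDA calls is left out for brevity.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

// Hypothetical kernel for illustration: each thread doubles one element.
__global__ void scale_by_two(float *data, int n) {
    int i = threadIdx.x;  // index of this thread within its block
    if (i < n) {
        data[i] = 2.0f * data[i];
    }
}

// Hypothetical helper that walks through the workflow for a small array.
// It launches a single block, so n must not exceed the per-block thread
// limit (1024 on current GPUs).
void run_workflow(float *host_data, int n) {
    float *device_data = NULL;
    size_t bytes = sizeof(float) * n;

    // Step 1: allocate device memory and copy the input from host to device.
    cudaMalloc((void **)&device_data, bytes);
    cudaMemcpy(device_data, host_data, bytes, cudaMemcpyHostToDevice);

    // Step 2: run the kernel on the device (one block of n threads).
    scale_by_two<<<1, n>>>(device_data, n);

    // Step 3: copy the result back to the host, then release device memory.
    // This cudaMemcpy waits for the kernel to finish before copying.
    cudaMemcpy(host_data, device_data, bytes, cudaMemcpyDeviceToHost);
    cudaFree(device_data);
}

int main() {
    float values[4] = {1.0f, 2.0f, 3.0f, 4.0f};
    run_workflow(values, 4);
    for (int i = 0; i < 4; i++) {
        printf("%.1f\n", values[i]);
    }
    return 0;
}
```

Keeping the three steps inside one helper makes the order of operations easy to follow; a real program would also check the value returned by each CUDA call.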

Let us write a basic program in CUDA.

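    #include <stdio.h>

    // Kernel: runs on the GPU and prints one line per thread.
    __global__ void Cuda_programming() {
        printf("Cuda programming\n");
    }

    int main() {
        // Launch the kernel with 1 block of 1 thread.
        Cuda_programming<<<1,1>>>();
        // Wait for the GPU to finish before the program exits.
        cudaDeviceSynchronize();
        return 0;
    }

- __global__: This specifier marks a function that is called from the host (CPU) and executed on the device (GPU). It is how kernel code is defined.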

- Cuda_programming<<<1,1>>>(): A kernel launch is written with the <<<M,T>>> syntax, which is required when calling a function marked __global__. The kernel starts with a grid of M thread blocks, and each thread block has T parallel threads.

- cudaDeviceSynchronize(): Kernel launches are asynchronous, so the host does not wait for the device to finish. cudaDeviceSynchronize() blocks the host until the device has completed its work.

Compile the code:

    $ nvcc -o Cuda_programming Cuda_programming.cu

The NVIDIA compiler separates the device code from the host code. The host code (the main function) is handed to the host compiler, such as gcc, while the device code (the Cuda_programming kernel) is compiled by the NVIDIA compiler.

The program just prints "Cuda programming". It does not demonstrate CUDA's capabilities, because the kernel runs in a single thread. Before going further, let's cover a little more theory.

Hierarchy of threads

- Block: A block is a group of threads.

- Grid: A grid is a collection of blocks. Each kernel launch creates a single grid.

- Thread: A thread is a single unit of execution running on a CUDA core.

Inbuilt Variables

- threadIdx.x: The index of the current thread within its block.

- blockIdx.x: The index of the current block within the grid.

- blockDim.x: The number of threads in a block.

- gridDim.x: The number of blocks in the grid.
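To see these variables in action, the sketch below has every thread record its own coordinates, which the host then prints in order. The kernel name `record_ids`, the helper `global_index`, and the launch shape of 2 blocks with 4 threads each are assumptions chosen to keep the output small.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

// Hypothetical helper: position of a thread in a one-dimensional grid.
__host__ __device__ int global_index(int block_idx, int block_dim, int thread_idx) {
    return block_idx * block_dim + thread_idx;
}

// Hypothetical kernel: every thread stores its own coordinates.
__global__ void record_ids(int *block_of, int *thread_of, int *dims) {
    int i = global_index(blockIdx.x, blockDim.x, threadIdx.x);
    block_of[i] = blockIdx.x;    // index of this thread's block in the grid
    thread_of[i] = threadIdx.x;  // index of this thread within its block
    if (i == 0) {
        dims[0] = gridDim.x;     // number of blocks in the grid
        dims[1] = blockDim.x;    // number of threads per block
    }
}

int main() {
    const int blocks = 2;
    const int threads = 4;
    const int total = blocks * threads;
    int h_block[total], h_thread[total], h_dims[2];
    int *d_block, *d_thread, *d_dims;

    cudaMalloc((void **)&d_block, sizeof(h_block));
    cudaMalloc((void **)&d_thread, sizeof(h_thread));
    cudaMalloc((void **)&d_dims, sizeof(h_dims));

    record_ids<<<blocks, threads>>>(d_block, d_thread, d_dims);

    // These copies wait for the kernel to finish before reading the results.
    cudaMemcpy(h_block, d_block, sizeof(h_block), cudaMemcpyDeviceToHost);
    cudaMemcpy(h_thread, d_thread, sizeof(h_thread), cudaMemcpyDeviceToHost);
    cudaMemcpy(h_dims, d_dims, sizeof(h_dims), cudaMemcpyDeviceToHost);

    printf("gridDim.x = %d, blockDim.x = %d\n", h_dims[0], h_dims[1]);
    for (int i = 0; i < total; i++) {
        printf("global index %d: blockIdx.x = %d, threadIdx.x = %d\n",
               i, h_block[i], h_thread[i]);
    }

    cudaFree(d_block);
    cudaFree(d_thread);
    cudaFree(d_dims);
    return 0;
}
```

With this launch shape, global index 5 belongs to the thread with blockIdx.x = 1 and threadIdx.x = 1, since 1 * 4 + 1 = 5.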

Let us take an example.

Parallelizing vector addition using multiple threads

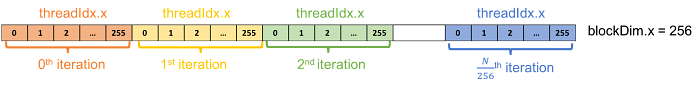

In this exercise (vector_add.cu), we parallelize vector addition using a thread block with 256 threads. The new kernel execution configuration is shown below.

    vector_add<<<1, 256>>>(d_out, d_a, d_b, N);

CUDA provides built-in variables for accessing thread information inside a kernel. This exercise uses two of them, threadIdx.x and blockDim.x:

threadIdx.x: the index of the thread within the block.

blockDim.x: the size of the thread block (the number of threads in the block).

For the vector_add() configuration, threadIdx.x ranges from 0 to 255 and blockDim.x is 256.

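    #include <stdio.h>
    #include <stdlib.h>

    #define N 1000000  // assumed array size; the original listing does not define N

    // Each thread starts at its own index and advances by the block size.
    __global__ void vector_add(float *out, float *a, float *b, int n) {
        int index = threadIdx.x;
        int stride = blockDim.x;
        int i = index;
        while (i < n) {
            out[i] = a[i] + b[i];
            i += stride;
        }
    }

    int main() {
        float *a, *b, *out;        // host arrays
        float *d_a, *d_b, *d_out;  // device arrays
        size_t bytes = sizeof(float) * N;

        // Allocate and initialise host memory.
        a = (float*)malloc(bytes);
        b = (float*)malloc(bytes);
        out = (float*)malloc(bytes);
        int i = 0;
        while (i < N) {
            a[i] = 0.1f;
            b[i] = 0.2f;
            i++;
        }

        // Allocate device memory and copy the inputs to the device.
        cudaMalloc((void**)&d_a, bytes);
        cudaMalloc((void**)&d_b, bytes);
        cudaMalloc((void**)&d_out, bytes);
        cudaMemcpy(d_a, a, bytes, cudaMemcpyHostToDevice);
        cudaMemcpy(d_b, b, bytes, cudaMemcpyHostToDevice);

        // Launch one block of 256 threads, passing device pointers.
        vector_add<<<1, 256>>>(d_out, d_a, d_b, N);

        // Copy the result back to the host.
        cudaMemcpy(out, d_out, bytes, cudaMemcpyDeviceToHost);

        cudaFree(d_a);
        cudaFree(d_b);
        cudaFree(d_out);
        free(a);
        free(b);
        free(out);
        return 0;
    }

The execution configuration is passed through the two values M and T in <<<M, T>>>. GPU threads are scheduled in groups of 32 (warps), so block sizes are normally chosen as a multiple of 32, and 256 is a reasonable choice. Here threadIdx.x ranges from 0 to 255 and blockDim.x is 256. If we launch with <<<1,1>>> instead, index is 0 and stride is 1, and the loop in vector_add becomes: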

    int i = 0;
    while (i < n) {
        ...
        i++;
    }

In this case a single thread iterates through the entire arrays to compute the vector addition. With 256 threads, the additions can be divided among the threads and computed simultaneously.

The k-th thread starts at the k-th element and steps through the array with a stride of 256. In iteration 0, the k-th thread adds the k-th elements; in the next iteration it adds the (k+256)-th elements, and so on. For example, with n = 1000, thread 5 handles elements 5, 261, 517 and 773. The principle is illustrated in the accompanying figure.

Components of a GPU

Global memory: The GPU's counterpart to the CPU's RAM. The device reads and writes it directly, and the host accesses it through calls such as cudaMemcpy.

Streaming multiprocessor (SM): The unit that actually performs the computation. Put simply, it is where the CUDA cores live.

CUDA GPUs contain multiple parallel processors called SMs. Each SM consists of several parallel processors and can run several thread blocks concurrently. To take advantage of this, the kernel should be launched with more than one block, and each thread must then compute its position as blockIdx.x * blockDim.x + threadIdx.x so that every block handles a different part of the array. The block size of 256 is already chosen, so what remains is the grid size: the number of elements divided by the block size, rounded up.
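A multi-block version of the exercise might look like the sketch below. Each thread combines its block index and thread index to find its element, and a bounds check protects the last, partially filled block. The names `vector_add_blocks`, `blocks_needed`, and `add_on_gpu`, and the array size of 1000, are assumptions for illustration and do not come from the original exercise.

```cuda
#include <stdio.h>
#include <stdlib.h>
#include <cuda_runtime.h>

#define BLOCK_SIZE 256

// Ceiling division: the number of blocks needed to cover n elements.
int blocks_needed(int n, int block_size) {
    return (n + block_size - 1) / block_size;
}

// Hypothetical multi-block kernel: one thread per element.
__global__ void vector_add_blocks(float *out, const float *a, const float *b, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    // The last block may contain more threads than remaining elements.
    if (i < n) {
        out[i] = a[i] + b[i];
    }
}

// Hypothetical host wrapper: copies inputs over, launches, copies the sum back.
void add_on_gpu(float *out, const float *a, const float *b, int n) {
    float *d_a, *d_b, *d_out;
    size_t bytes = sizeof(float) * n;

    cudaMalloc((void **)&d_a, bytes);
    cudaMalloc((void **)&d_b, bytes);
    cudaMalloc((void **)&d_out, bytes);
    cudaMemcpy(d_a, a, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, b, bytes, cudaMemcpyHostToDevice);

    int blocks = blocks_needed(n, BLOCK_SIZE);
    vector_add_blocks<<<blocks, BLOCK_SIZE>>>(d_out, d_a, d_b, n);

    // This copy waits for the kernel to finish before reading the result.
    cudaMemcpy(out, d_out, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_out);
}

int main() {
    const int n = 1000;  // assumed size, deliberately not a multiple of 256
    float *a = (float *)malloc(sizeof(float) * n);
    float *b = (float *)malloc(sizeof(float) * n);
    float *out = (float *)malloc(sizeof(float) * n);
    for (int i = 0; i < n; i++) {
        a[i] = 1.0f;
        b[i] = 2.0f;
    }

    add_on_gpu(out, a, b, n);
    printf("blocks = %d, out[0] = %.1f, out[%d] = %.1f\n",
           blocks_needed(n, BLOCK_SIZE), out[0], n - 1, out[n - 1]);

    free(a);
    free(b);
    free(out);
    return 0;
}
```

For n = 1000 and a block size of 256, the calculation gives 4 blocks, or 1024 threads in total, so the last 24 threads fail the `i < n` check and do nothing.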

    int BLOCKS_NUM = (N + BLOCK_SIZE - 1) / BLOCK_SIZE;
    vector_add<<<BLOCKS_NUM, BLOCK_SIZE>>>(d_out, d_a, d_b, N);

Advantages:

- Simple to use: The CUDA API lets us use the GPU without in-depth knowledge of GPU hardware. Because CUDA is essentially C with NVIDIA extensions, it can be applied to existing code bases with relatively little code modification.

- Performance: NVIDIA develops the API as well as the hardware. This enables them to fine-tune the API to extract the best possible performance from your GPU.

Disadvantages:

- Proprietary software: CUDA is owned by NVIDIA and has a closed architecture. Running it requires NVIDIA hardware and the CUDA SDK tools.

- Limited recursion: CUDA works best with simple loops. Recursive code is difficult to implement on the GPU and difficult to eliminate from existing algorithms.